AWS Deployment

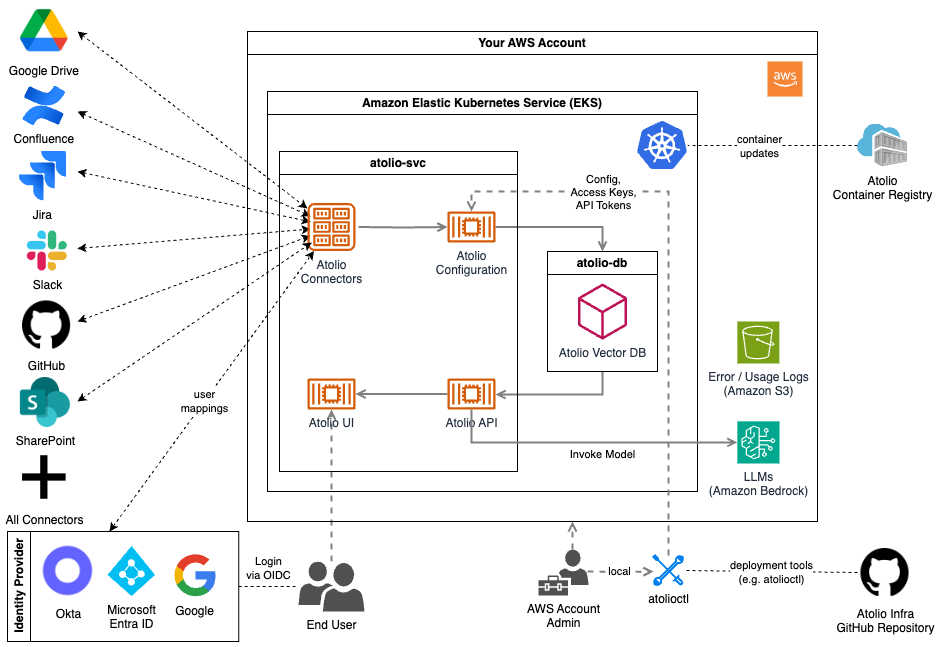

Architecture

Deployment Prerequisites

In order to get started, your Atolio support team will do the following on your behalf:

- Grant your AWS account access to the Client ECR repos (for pulling Docker images).

- Add your Deployment Engineer as a collaborator to the Atolio GitHub repository (lumen-infra), which contains:

  - Deployment documentation

  - Terraform for the Atolio stack infrastructure

  - Configuration files for Atolio services

  - Maintenance scripts

The following deployment prerequisites will help streamline your deployment process.

Determine AWS account

You can deploy Atolio into either an existing AWS account or a new one. Atolio also supports deploying to your own AWS Virtual Private Cloud (VPC). When the account is available, share the AWS account number with your Atolio support team.

We recommend:

- Ensuring that Service Quotas within your AWS account allow for a minimum of 64 vCPU for On-Demand Standard instances.

- Raising any other organizational AWS policies / restrictions (e.g. networking, containers) with your Atolio support team ahead of the deployment call.
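As a rough pre-flight check, the current On-Demand Standard vCPU quota can be read with the AWS CLI and compared against the 64 vCPU minimum. The sketch below assumes that L-1216C47A is the quota code for Running On-Demand Standard instances and that the AWS CLI is already configured for the target account and region. The function names are illustrative and not part of any Atolio tooling.

```shell
# Minimum On-Demand Standard vCPU quota recommended above.
REQUIRED_VCPUS=64

# Succeeds when a quota value (possibly a decimal such as "64.0") meets the minimum.
quota_is_sufficient() {
  whole="${1%.*}"   # drop any fractional part before the integer comparison
  [ "$whole" -ge "$REQUIRED_VCPUS" ]
}

# Prints the current quota for the configured account and region.
# Assumption: L-1216C47A is the quota code for
# "Running On-Demand Standard (A, C, D, H, I, M, R, T, Z) instances".
current_vcpu_quota() {
  aws service-quotas get-service-quota \
    --service-code ec2 \
    --quota-code L-1216C47A \
    --query 'Quota.Value' \
    --output text
}
```

For example, `quota_is_sufficient "$(current_vcpu_quota)" || echo "Request a quota increase"` flags an account that needs a Service Quotas request before the deployment call.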

Determine Deployment Model

We offer both Atolio managed and customer managed deployment models for you to choose from. Please review the comparison and requirements for each approach on our Deployment Model Overview page and inform your Atolio support team which method you’d like to use for your deployment.

Atolio Managed Deployment Prerequisites

The exact permissions being delegated will be presented to the engineer running the script prior to executing. The IAM policies included are:

arn:aws:iam::aws:policy/PowerUserAccess

arn:aws:iam::aws:policy/IAMFullAccess

Access will be limited to a client support machine that is only accessible to Atolio support engineers and that has a static IP of 52.43.209.253 assigned to aid in identifying activity from Atolio’s team.

If you opt to allow Atolio’s deployment support team to manage the deployment on your behalf, follow these steps to enable this for your AWS account:

Clone our lumen-infra GitHub repository

git clone git@github.com:atolio/lumen-infra.git

Run our AWS support role script against the AWS account you’d like us to deploy into

./lumen-infra/deploy/terraform/aws/scripts/atolio-support-role.sh

Review the output from the script and provide the Role ARN to your Atolio support team

Operation completed successfully. Role ARN: arn:aws:iam::123456789012:role/AtolioDeploymentAccess

Determine Atolio DNS name

Before the deployment call, you may want to decide on your desired Atolio web location. An AWS Route 53 hosted zone will be created in the AWS account used for hosting the Atolio stack (e.g. search.example.com.). Its name, without the trailing dot, will be the DNS name for the Atolio web application (e.g. https://search.example.com).

This hosted zone allows the deployment (i.e. the External DNS controller) to add records that link host names (e.g. search.example.com, feed.search.example.com and relay.search.example.com) to the load balancer created by the AWS ALB controller.

For the remainder of this document, we will use https://search.example.com in the examples; replace it with your own DNS name.

Determine Cloud Networking Options

By default, Atolio’s Terraform code will create a VPC. However, you may choose to use an existing VPC and subnets within your AWS account. In this case, set create_vpc to false.

Then configure all VPC-related variables. See the sample below:

// Uncomment these lines and update the values in case you want to deploy in a

// pre-existing VPC (by default a new VPC will be created).

//

// Note that automatic subnet discovery for the ALB controller will only work

// if the subnets are tagged correctly as documented here:

// https://kubernetes-sigs.github.io/aws-load-balancer-controller/v2.7/deploy/subnet_discovery/

// create_vpc = false

// vpc_id = "vpc-000"

// vpc_cidr_block = "10.0.0.0/16"

// vpc_private_subnet_ids = ["subnet-1111", "subnet-2222"]

// vpc_public_subnet_ids = ["subnet-3333", "subnet-4444"]

// vespa_az = "us-west-2a"

// vespa_private_subnet_id = "subnet-1111"

Additional notes regarding existing VPC usage:

- As per the sample above, subnets must be tagged correctly as documented in subnet discovery.

- When specifying vespa_private_subnet_id, the referenced subnet ID must also be in the vpc_private_subnet_ids array.

- In terms of VPC sizing, the default (10.0.0.0/16) is currently oversized. For reference, VPC subnet IP addresses are primarily allocated to the EKS cluster and ALB, with AWS reserving several for internal services. We recommend a subnet of /24 (256 IPs) as the minimum to ensure enough available IP addresses for Kubernetes to assign to pods.

- Ensure the specified subnets have available IPv4 addresses.

If custom networking configuration is necessary, be sure to provide these details to the engineer performing the deployment.
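The membership rule for vespa_private_subnet_id and the free-address requirement can both be checked before running Terraform. The sketch below uses two hypothetical helpers, subnet_in_list and available_ips, with the placeholder subnet IDs from the sample above. The second helper needs AWS credentials for the target account.

```shell
# Succeeds when the first argument appears among the remaining arguments.
subnet_in_list() {
  needle="$1"
  shift
  for id in "$@"; do
    [ "$id" = "$needle" ] && return 0
  done
  return 1
}

# Prints the number of free IPv4 addresses in a subnet (requires AWS credentials).
available_ips() {
  aws ec2 describe-subnets \
    --subnet-ids "$1" \
    --query 'Subnets[0].AvailableIpAddressCount' \
    --output text
}
```

For example, `subnet_in_list subnet-1111 subnet-1111 subnet-2222 && available_ips subnet-1111` confirms the Vespa subnet is part of the private subnet list and then reports how many addresses it has left.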

Setup authentication

Atolio supports single sign-on (SSO) authentication through Okta, Microsoft Entra ID, and Google using the OpenID Connect (OIDC) protocol.

Refer to Configuring Authentication for more details on the steps to complete in your desired SSO provider in order to obtain the necessary OIDC configuration values.

Setup local environment

Finally, if an engineer from your team will be performing the deployment, ensure they have the following utilities installed:

- Set up Terraform on your local machine as described on the HashiCorp docs site. We require v1.5.0 at a minimum.

- Install the AWS Command Line Interface

- Install kubectl

- Install Helm

- Install atolioctl
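The Terraform version requirement can be verified up front. This is a minimal sketch, assuming GNU sort (for its -V version ordering) and jq are available; version_at_least and installed_terraform_version are hypothetical helper names.

```shell
# Succeeds when version $1 is greater than or equal to version $2.
version_at_least() {
  lowest="$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)"
  [ "$lowest" = "$2" ]
}

# Prints the installed Terraform version as reported by the CLI.
installed_terraform_version() {
  terraform version -json | jq -r '.terraform_version'
}
```

For example, `version_at_least "$(installed_terraform_version)" 1.5.0 || echo "Terraform is too old"`.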

Provide Deployment Engineer with Configuration

At this point, if you’re proceeding with an Atolio managed deployment, you’ll need to provide the information from these prerequisite steps to your Atolio deployment support team. Otherwise, please ensure the information is provided to the engineer from your organization who will be performing the deployment with Atolio’s support.

To recap, provide these details:

If you’re opting to have Atolio manage the deployment, you can disregard the remainder of this documentation, as these steps will be performed by your Atolio deployment engineer. Otherwise, be sure to share this documentation with the engineer from your organization who will be performing the deployment so they can familiarize themselves with the steps required.

Create Cloud Infrastructure

The Terraform configuration requires an external (S3) bucket to store state. A script is available to automate the whole process (including running Terraform). Before running the script, create a config.hcl file based on the provided config.hcl.template:

cd deploy/terraform/aws

cp ./config.hcl.template config.hcl

Atolio Domain Name

Update the copied file with appropriate values. At a minimum, it should look something like this:

# Domain name for Atolio stack (same as hosted zone name without trailing ".")

lumen_domain_name = "search.example.com"

Application Helm Value Options

Next, copy the Helm templates and update the values as directed.

cp ./templates/values-lumen-admin.yaml values-lumen.yaml

cp ./templates/values-vespa-admin.yaml values-vespa.yaml

# Default values for lumen.

# This is a YAML-formatted file.

# Declare variables to be passed into your templates (provided by admin user).

# JWT secret key for API call (signature) verification (at least 256 bits / 32 chars)

# Can be generated by running `openssl rand -base64 32` in your terminal

jwtSecretKey: "add-your-jwt-secret-key-here"

# Secret salts for generating Vespa document IDs

# Can be generated by running `openssl rand -base64 32` in your terminal

secretSalts: "add-your-secret-salts-here"

# See also scripts/config-oidc.sh helper script to obtain some of the values below

oidc:

provider: "add-your-provider-here"

endpoint: "add-your-endpoint-here"

clientId: "add-your-id-here"

clientSecret: "add-your-secret-here"

# If running behind a reverse proxy, this should be set to the URL the end user will

# use to access the product.

reverseProxyUrl: ""

# The ACME Cluster Issuer for LetsEncrypt requires an email address to be provided

# for certificate notifications. This is required for LetsEncrypt certificates to work properly.

# Common examples would be an admin or technical support email address for your organization. ex: "admin@example.com" or "engineering@example.com".

letsencrypt:

email: "letsencrypt@example.com"

For the jwtSecretKey and secretSalts values, any string of at least 256 bits (32 characters) can be used. jwtSecretKey is used to sign the JWT tokens used by the web application and the atolioctl tool, and secretSalts is used to salt document IDs in Atolio’s database. Both should be well-guarded secrets that are unique to the deployment.
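Both values can be generated with the openssl command mentioned in the template comments. The following is a small sketch, assuming openssl is installed; generate_secret and print_secret_values are illustrative names. Note that 32 random bytes encode to 44 base64 characters, which exceeds the 32-character minimum.

```shell
# Prints 32 random bytes (256 bits) encoded as base64.
generate_secret() {
  openssl rand -base64 32
}

# Prints fresh values in the key/value form used by values-lumen.yaml.
print_secret_values() {
  printf 'jwtSecretKey: "%s"\n' "$(generate_secret)"
  printf 'secretSalts: "%s"\n' "$(generate_secret)"
}
```

Running `print_secret_values` prints two lines that can be pasted over the placeholder entries. Avoid committing the resulting file to a shared repository.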

If your users will be accessing the web interface via a reverse proxy (e.g. StrongDM), be sure to set the reverseProxyUrl field to the URL they will actually enter into their browser to access Atolio, which will differ from the hostname defined in lumen_domain_name. Leave this field empty if not using a reverse proxy.

Deployment with create-infra.sh script

Once you have all variables configured, you can create the infrastructure and deploy the k8s cluster. From the deploy/terraform/aws directory:

./scripts/create-infra.sh --name=deployment-name

This will create the infrastructure in the us-west-2 AWS region. If you want to deploy in another region (e.g. us-east-1), provide an additional parameter:

./scripts/create-infra.sh --name=deployment-name --region=us-east-1

The deployment-name argument is used to tag resources and to name resources such as the Kubernetes cluster and S3 buckets, so make sure it is globally unique across all deployments. Typically this is set to your company name, with an optional suffix to indicate the environment (e.g. acmecorp or acmecorp-qa).
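Because the deployment name ends up in resource names such as S3 buckets, it helps to keep it to lowercase letters, digits, and hyphens. The check below is a conservative sketch based on S3 bucket naming rules. The 32-character cap is an assumption chosen to leave room for prefixes, not a documented limit of create-infra.sh, and is_valid_deployment_name is a hypothetical helper.

```shell
# Succeeds when the name is 1-32 characters of lowercase letters, digits, and
# hyphens, and starts and ends with a letter or digit.
is_valid_deployment_name() {
  printf '%s\n' "$1" | LC_ALL=C grep -Eq '^[a-z0-9]([a-z0-9-]{0,30}[a-z0-9])?$'
}
```

For example, `is_valid_deployment_name acmecorp-qa && ./scripts/create-infra.sh --name=acmecorp-qa` only starts the deployment when the name passes the check.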

The script automates the following steps (parameterized based on the provided deployment name):

- Create an S3 bucket to store Terraform state

- Create a terraform.tfvars file for Terraform

- Run terraform init

- Run terraform apply (using input variables in generated terraform.tfvars)

Post-deployment steps

With the infrastructure created, you’ll want to update your local kubeconfig with a context for the Atolio cluster (this is also output via Terraform as update_kubeconfig_command):

aws --profile {atolio profile} eks update-kubeconfig --region us-west-2 --name lumen-{deployment-name}

Delegate responsibility for Atolio subdomain

The parent domain (e.g. example.com) needs to delegate traffic to the new Atolio subdomain (search.example.com). This is achieved by adding an NS record to the parent domain with the 4 name servers copied from the new subdomain (similar to what is described here).

These nameservers can be retrieved post-creation with terraform output:

terraform output --json name_servers | jq -r '.[]'
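Once the NS record has been added to the parent domain, the delegation can be checked by comparing what public DNS returns with the Terraform output. The following is a sketch, assuming dig and jq are installed and using the search.example.com placeholder; the helper names are illustrative.

```shell
# Lowercases, strips trailing dots, and sorts a newline-separated list of name servers.
normalize_ns() {
  printf '%s\n' "$1" | tr '[:upper:]' '[:lower:]' | sed 's/\.$//' | sort
}

# Succeeds when both lists contain the same name servers.
same_name_servers() {
  [ "$(normalize_ns "$1")" = "$(normalize_ns "$2")" ]
}

# Compares the hosted zone's name servers with those returned by DNS for domain $1.
check_delegation() {
  expected="$(terraform output --json name_servers | jq -r '.[]')"
  actual="$(dig +short NS "$1")"
  same_name_servers "$expected" "$actual"
}
```

For example, `check_delegation search.example.com && echo "delegation OK"`. DNS changes can take some time to propagate, so a mismatch right after adding the record is not necessarily an error.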

Synchronize ACME Certificate to ACM

AWS Load Balancers are configured with a certificate issued by ACM. This certificate needs to be updated when the ACME certificate changes. An automated cronjob is scheduled to update the certificate every hour. It can be helpful to run this job manually after the initial deployment to ensure the certificate is up-to-date prior to the first scheduled job. You can use kubectl to do so:

kubectl -n atolio-svc create job --from=cronjob/acm-sync acm-sync-initial-run

At this point, you should be able to interact with the Kubernetes cluster, for example:

kubectl get po -n atolio-svc

Note that Atolio-specific services run in the following namespaces:

- atolio-svc (Services)

- atolio-db (Database)

- atolio-ctl (Control Plane)
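To get a quick overview of all three namespaces at once, the pod listing can be wrapped in a loop. This is a minimal sketch that assumes your kubeconfig context already points at the Atolio cluster; list_atolio_pods is an illustrative name.

```shell
# Namespaces used by Atolio services, as listed above.
ATOLIO_NAMESPACES="atolio-svc atolio-db atolio-ctl"

# Lists the pods in each Atolio namespace, with a header line per namespace.
list_atolio_pods() {
  for ns in $ATOLIO_NAMESPACES; do
    echo "== $ns =="
    kubectl get pods -n "$ns"
  done
}
```

Calling `list_atolio_pods` after the initial deployment makes it easy to spot pods that are not yet in the Running state.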

When you have validated that the infrastructure is available, the next step is to configure sources.